In supermodel a single model might be broken down into say 4 different meshes. They all have the same model matrix so this is only passed once. The shader class caches all the shader uniforms so only the differences are sent to the GPU. Ie if the only difference in the meshes is the texture ID, only that is updated with a call to glUniform.

If you don't care about quad rendering you could probably pass the entire 7 word header and pass it directly to the GPU as an integer attribute. Then extract the relevant bits and do everything in the shader.

Model 3 Step 1/1.5 and Step 2 Video Board Differences

Forum rules

Keep it classy!

Keep it classy!

- No ROM requests or links.

- Do not ask to be a play tester.

- Do not ask about release dates.

- No drama!

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

quite interesting, thanksBart wrote: ↑Thu Apr 24, 2025 10:46 pm I don't know if Pro-1000 operates in passes per se but there is this concept of a contour texture. Alpha blending also works entirely differently than expected. One theory is that it actually renders only every other pixel and then biases the rendered pixels toward visible or black. See here.

and that's exactly why I'm asking questions here

it should be based on something Sega already familiar with.

why? I'd say it looks familiar in general concepts, with the difference Hikaru have it in the form of "command list" for GPU, which is probably transformed by "Command Processor" to the form of more low level data for next ASIC which will do actual rendering.

okay, but if you replace words "CALL" and "JUMP" with "Node link", and "RET" with "return to previous level node" it will be exact same tree-leaf graph structure as you described, isn't it?Bart wrote: ↑Thu Apr 24, 2025 10:46 pm The command list you linked looks like a set of instructions for the GPU to execute. Pro-1000 doesn't have that concept. The display lists aren't arbitrary lists of commands to follow. They're a data structure that is traversed. At the high level, there is a linked list of viewports to render. Each viewport is described by the exact same struct (which sets lighting, fog, and other parameters) that points to a list of top-level nodes to render. Each of these is a hierarchical set of culling nodes that terminate in a pointer to a model to render (stored in polygon RAM or in VROM). And each node can, IIRC, point to yet another list. So it's a tree-like structure of nodes and lists of nodes. Each time you go one node deeper, you apply a transform matrix (stored elsewhere and referred to by its index), which translates pretty directly to the OpenGL matrix stack.

It's a scene graph: each node basically specifies how to transform the children below it. And at the very end of this chain is the thing to render with those transforms applied. There is also bounding box information and LOD information (up to, I believe, 4 different models to select from depending on distance and view angle) for culling invisible models and switching to lower-fidelity ones.

of course the way of how Hikaru retrieving display list is totally different than Model3. it can be anywhere in PCI space, and even be in different RAMs of that space, usually it starts in PCI bridge chip's local RAM, but then may do "CALL"s for example to slave SH4 CPU RAM, where stored some models.

the result is presumable stored to CP's(Command Processor) local RAM (Antarctic ASIC on diagram), there is 8MB of SDRAM wired to it, which seems not accessible by main CPU since it's not checked by BIOS RAM test.

but, it should be used for *something*, and that something is not textures or 2D layers data or smth similar. so, it's probably for display list (polygons, models, etc), and of course data there will be not the same "high level" as input commands, but more close to something like M3 scene node list with headers full of magic control bits and everything like that.

as I suppose there is also may be double buffering - while CP processing one list it also transfering previous one to GPU which actually does rendering.

as of matrices - Hikaru GPU have similar thing: at top level you may setup 2 current matrices, then at next level you may multiply them by another matrix, and so on, there is also push/pop operations to preserve matrices of upper level.

TLDR: just imagine if add to Real3D 1000 some "frontend" processor, which will be read data via PCI, parse commands/vertex lists, then transform it to the form of linked node list with all these polygons headers, etc, store to local RAM, and then push it to ASIC which does actual rendering

nothing unusual here, it was the same in most of mid-late 90x GPUs, like PowerVRs and others.Bart wrote: ↑Thu Apr 24, 2025 10:46 pm But here's where things really differ from a more conventional GPU: rendering state isn't modified arbitrarily by command lists, as in Hikaru. Apart from some viewport-level things like fog and light vector, the various shading and rendering options are configured per-polygon. Every polygon in a model contains a big header consisting of 7 words (32 bits each). These specify texturing, shading, color, blending with fog, etc.

-

gm_matthew

- Posts: 47

- Joined: Wed Nov 08, 2023 2:10 am

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

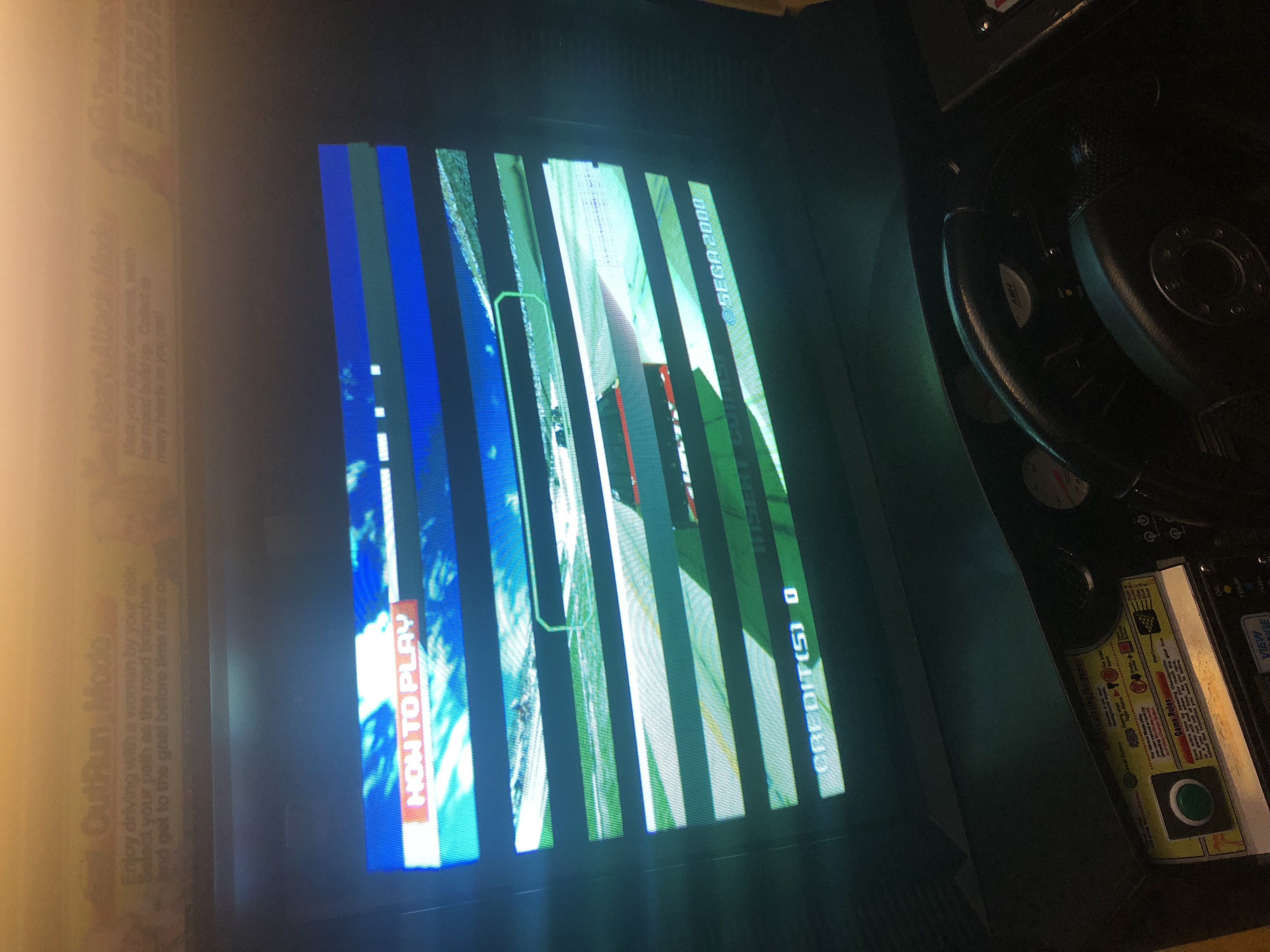

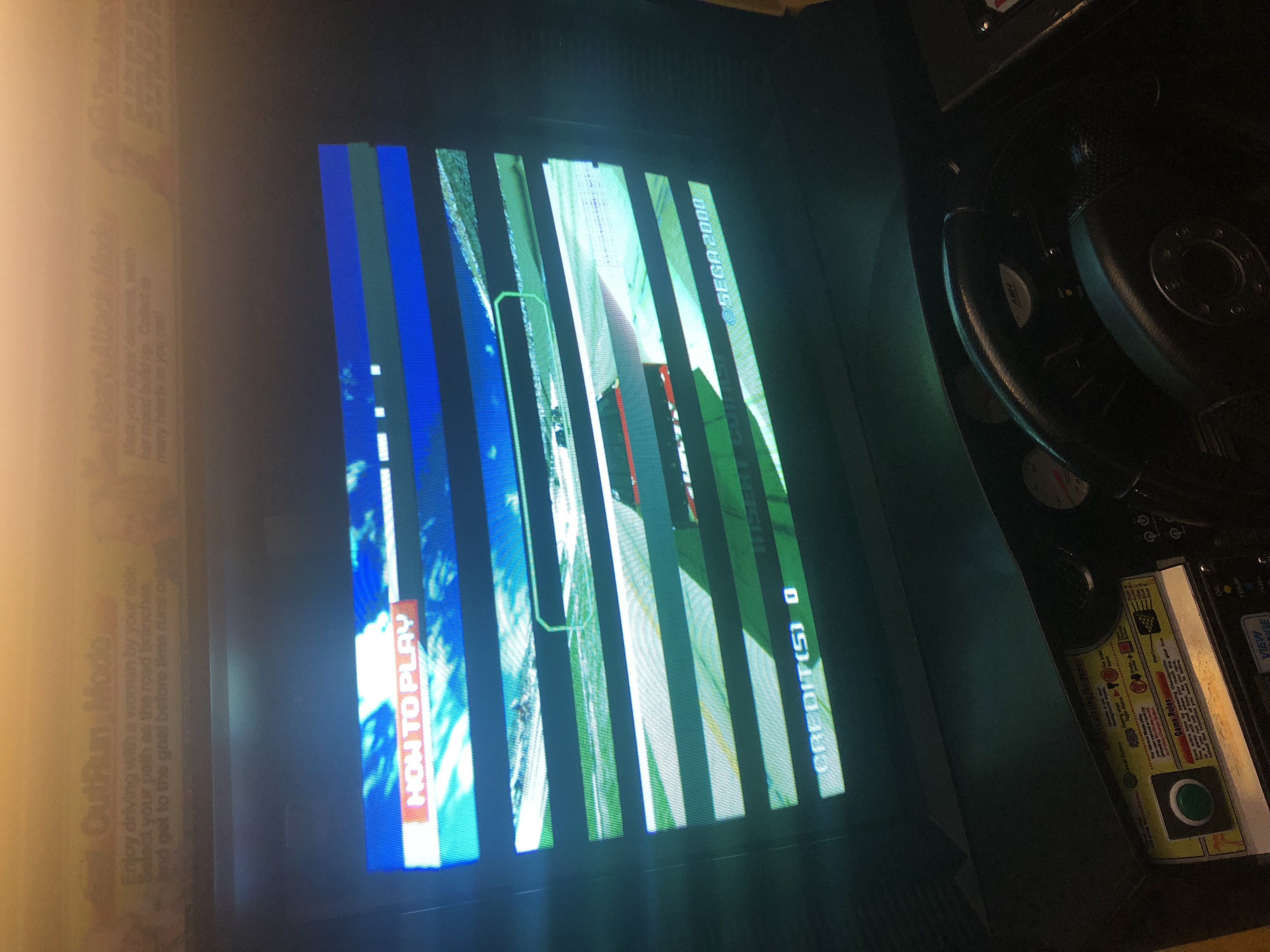

Since Hikaru seems to have become a recurring subject in this thread, I've discovered the following picture of NASCAR Arcade with at least one of the graphics chips not working properly:

There's a total of 15 stripes, and 480 / 15 = 32 lines. This means that the 3D graphics are working for the first 32 lines, then not working for the next 32 lines, then working again for the next 32, etc.

I know that Hikaru doesn't use the same PowerVR GPU that Dreamcast/NAOMI uses but it seems to me that it might be implementing its own 32x32 tile-based rendering, which would be particularly useful for Phong shading; you really don't want to perform the expensive shading calculations for pixels that ultimately don't end up on screen if you can avoid it.

If I had to guess, I'd say the "Atlantis" chips perform rasterization with the odd-numbered rows of 32x32 tiles being processed by one Atlantis and the even-numbered rows by the other Atlantis, while "America" performs shading for pixels that end up being visible on the screen. In the above picture, one of the Atlantis chips isn't working hence every other row of 32 lines doesn't render properly. Thoughts?

There's a total of 15 stripes, and 480 / 15 = 32 lines. This means that the 3D graphics are working for the first 32 lines, then not working for the next 32 lines, then working again for the next 32, etc.

I know that Hikaru doesn't use the same PowerVR GPU that Dreamcast/NAOMI uses but it seems to me that it might be implementing its own 32x32 tile-based rendering, which would be particularly useful for Phong shading; you really don't want to perform the expensive shading calculations for pixels that ultimately don't end up on screen if you can avoid it.

If I had to guess, I'd say the "Atlantis" chips perform rasterization with the odd-numbered rows of 32x32 tiles being processed by one Atlantis and the even-numbered rows by the other Atlantis, while "America" performs shading for pixels that end up being visible on the screen. In the above picture, one of the Atlantis chips isn't working hence every other row of 32 lines doesn't render properly. Thoughts?

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

good find, thanks!

I'd say it more looks like how Voodoo SLI has been working, dividing screen in horizontal strips, while PowerVRs usually divide screen in tiles like chequered flag, you may see it on photos of Naomi 2s with one of GPUs broken.

as of "is it may be something from ImgTec?" in general:

- Texture formats

PowerVRs usually supports wide set of texture formats: 1555, 565, 4444, 8888, YUV, Bump map, 4bpp or 8bpp palettes. textures may be either regular or VQ-compressed.

Hikaru have only: ARGB1555, ARGB4444, A8L8, A4 (only used by Test mode font), I4 (unused), and that's all.

doesn't looks similar, isn't it? but it's a bit similar to small set of textures mentioned there http://textfiles.com/computers/DOCUMENT ... 3dspec.txt

- Texture addressing

PowerVRs select texture using base address in VRAM, the whole texture with optional mip levels is linear chunk of data.

Hikaru use "texture RAM" in form of x2 2048x1024pix rectangle banks. textures selected using X/Y "slot" position, where slot is 16x16pix cell.

if I not mistaken, Real3D 1000 have something similar, but 8x8pix slot/cell.

- Vertices

PowerVRs use normalized (0-1) IEEE 32bit float texture coordinates (may be cut to 16bit, dropping lower 16bits of mantissa).

Hikaru uses 16bit UVs - either 16bit integer or 12.4 fixed point depending on control bit, which really reminds Real3D 1000, isn't it?

XYZ coordinates - PowerVRs again uses IEEE 32bit floats only, Hikaru uses either floats or a bit strange format where vertex contains 22bit kind of mantissa, while exponent set by special command, again it looks similar to Real3D coords scaling coefficient but in a bit different form.

another interesting and unusual thing in vertices - so far we never seen any colors provided with them, but only alpha in vertex header, coords, normal and optional texture coords.

there is also alpha value provided in "model type (opaque, trans, etc) select" command, which looks similar to Real3D's "node alpha" with "vertex alpha".

[I may add here more differences and similarities if I'll recall them]

Hikaru also supports "Depth blend" fog-like feature, which may make objects translucent depending on depth, and I don't know any of GPUs which had such feature.

this can be seen for example here - https://youtu.be/bsh-knaeEio?t=93 at ~1:33

you may see large propellers become translucent and you may see background clouds behind them, but these propellers drawn as opaque type polygons, with both node and vertices alphas 255. so far I no good ideas how it may work, perhaps it may be implemented similar to things said in topic about how Rreal3D's anti-aliasing works?

so, summary: Hikaru GPU clearly doesn't looks like ImgTec's product, but it reminds Real3D in some small details.

I'd say it more looks like how Voodoo SLI has been working, dividing screen in horizontal strips, while PowerVRs usually divide screen in tiles like chequered flag, you may see it on photos of Naomi 2s with one of GPUs broken.

as of "is it may be something from ImgTec?" in general:

- Texture formats

PowerVRs usually supports wide set of texture formats: 1555, 565, 4444, 8888, YUV, Bump map, 4bpp or 8bpp palettes. textures may be either regular or VQ-compressed.

Hikaru have only: ARGB1555, ARGB4444, A8L8, A4 (only used by Test mode font), I4 (unused), and that's all.

doesn't looks similar, isn't it? but it's a bit similar to small set of textures mentioned there http://textfiles.com/computers/DOCUMENT ... 3dspec.txt

- Texture addressing

PowerVRs select texture using base address in VRAM, the whole texture with optional mip levels is linear chunk of data.

Hikaru use "texture RAM" in form of x2 2048x1024pix rectangle banks. textures selected using X/Y "slot" position, where slot is 16x16pix cell.

if I not mistaken, Real3D 1000 have something similar, but 8x8pix slot/cell.

- Vertices

PowerVRs use normalized (0-1) IEEE 32bit float texture coordinates (may be cut to 16bit, dropping lower 16bits of mantissa).

Hikaru uses 16bit UVs - either 16bit integer or 12.4 fixed point depending on control bit, which really reminds Real3D 1000, isn't it?

XYZ coordinates - PowerVRs again uses IEEE 32bit floats only, Hikaru uses either floats or a bit strange format where vertex contains 22bit kind of mantissa, while exponent set by special command, again it looks similar to Real3D coords scaling coefficient but in a bit different form.

another interesting and unusual thing in vertices - so far we never seen any colors provided with them, but only alpha in vertex header, coords, normal and optional texture coords.

there is also alpha value provided in "model type (opaque, trans, etc) select" command, which looks similar to Real3D's "node alpha" with "vertex alpha".

[I may add here more differences and similarities if I'll recall them]

Hikaru also supports "Depth blend" fog-like feature, which may make objects translucent depending on depth, and I don't know any of GPUs which had such feature.

this can be seen for example here - https://youtu.be/bsh-knaeEio?t=93 at ~1:33

you may see large propellers become translucent and you may see background clouds behind them, but these propellers drawn as opaque type polygons, with both node and vertices alphas 255. so far I no good ideas how it may work, perhaps it may be implemented similar to things said in topic about how Rreal3D's anti-aliasing works?

so, summary: Hikaru GPU clearly doesn't looks like ImgTec's product, but it reminds Real3D in some small details.

Last edited by MetalliC on Mon May 12, 2025 6:11 pm, edited 2 times in total.

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

as of "how this GPU works?" - I have no clear understanding at this moment, so far my best guess is:

Antarctic fetching display list via PCI and put it into local 8MB RAM, probably it does data binning as per polygon type, viewport, priority, etc.

then next Africa ASIC does actual display list processing, 2 next Atlantis ASICs does parallel rasterization and maybe lighting math, then America does lighting/shading magic with texture data from 2 Australia texture units (each of them contain even or odd mip levels, so together they may do 1 clock fetch 2 samples for trilinear).

then rendered result stored into Eurasia's local 8MB VRAM, optionally it may be blended with some another 640x480 image from VRAM for motion blur-like effects.

then Eurasia output that to RGB DAC(s), optionally mixing with 2 2D layers from VRAM.

there is still the questions like "where is depth/stencil buffer and working render buffer?", I don't know, probably it's inside of America ASIC, or Atlantis ASICs,

here is diagram of the whole thing if you may be interested

it doesn't include more minor lines, like clock, reset, interrupt, etc lines distribution.

and btw, all GPU ASICs connected in a JTAG chain, pretty similar to Model 3

Antarctic fetching display list via PCI and put it into local 8MB RAM, probably it does data binning as per polygon type, viewport, priority, etc.

then next Africa ASIC does actual display list processing, 2 next Atlantis ASICs does parallel rasterization and maybe lighting math, then America does lighting/shading magic with texture data from 2 Australia texture units (each of them contain even or odd mip levels, so together they may do 1 clock fetch 2 samples for trilinear).

then rendered result stored into Eurasia's local 8MB VRAM, optionally it may be blended with some another 640x480 image from VRAM for motion blur-like effects.

then Eurasia output that to RGB DAC(s), optionally mixing with 2 2D layers from VRAM.

there is still the questions like "where is depth/stencil buffer and working render buffer?", I don't know, probably it's inside of America ASIC, or Atlantis ASICs,

here is diagram of the whole thing if you may be interested

it doesn't include more minor lines, like clock, reset, interrupt, etc lines distribution.

and btw, all GPU ASICs connected in a JTAG chain, pretty similar to Model 3

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

btw, did I get it right from Supermodel source code - Model 3 same have no per-vertex colors, but only per polygon/mesh/or whatever you call group of vertices?

-

gm_matthew

- Posts: 47

- Joined: Wed Nov 08, 2023 2:10 am

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

Yes, Model 3 has one color per polygon; is the same true for Hikaru?

If it's true that Hikaru uses two 2048x1024 texture sheets just like Model 3 does, then I might just be convinced that Real3D came up with it because pretty much everything else uploads textures as contiguous blocks of data.

The graphics chip part numbers suggest that most of them may have been designed shortly after Model 3 Step 2.x; the graphics chips on step 2.x have the part numbers 315-6057 to 315-6061 while most of the graphics chips on Hikaru have part numbers 315-6083 to 315-6087; the exceptions are the two Atlantis chips (315-6197) which still predate the PowerVR chip (315-6267) used on Dreamcast/NAOMI.

It looks to me like Hikaru was conceived around 1997 or so to be the successor to Model 3, but then the much cheaper NAOMI came along so Sega decided to only use Hikaru for games which really needed the extra power.

If it's true that Hikaru uses two 2048x1024 texture sheets just like Model 3 does, then I might just be convinced that Real3D came up with it because pretty much everything else uploads textures as contiguous blocks of data.

The graphics chip part numbers suggest that most of them may have been designed shortly after Model 3 Step 2.x; the graphics chips on step 2.x have the part numbers 315-6057 to 315-6061 while most of the graphics chips on Hikaru have part numbers 315-6083 to 315-6087; the exceptions are the two Atlantis chips (315-6197) which still predate the PowerVR chip (315-6267) used on Dreamcast/NAOMI.

It looks to me like Hikaru was conceived around 1997 or so to be the successor to Model 3, but then the much cheaper NAOMI came along so Sega decided to only use Hikaru for games which really needed the extra power.

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

the question was about does Model 3 have per-vertex colors? (like almost all the other GPUs). but it looks it doesn't, same as Hikaru.gm_matthew wrote: ↑Thu May 15, 2025 1:01 am Yes, Model 3 has one color per polygon; is the same true for Hikaru?

as of one color per polygon - yes and no, Hikaru implements typical late 90s fixed function T&L with "material", "lights", "texture heads" etc type objects, while Real3D 1000 / Model 3 is more archaic early-mid 90s device and have almost everything in polygon header. Hikaru's materials have several colors, but they are mostly not understood, currently we use only one as diffuse and ignore others. see next msg.

yes, Hikaru uses 2048x1024 "texture sheets" banks, 2 of them.gm_matthew wrote: ↑Thu May 15, 2025 1:01 amIf it's true that Hikaru uses two 2048x1024 texture sheets just like Model 3 does, then I might just be convinced that Real3D came up with it because pretty much everything else uploads textures as contiguous blocks of data.

in general it is not something totally unique, iirc PlayStation 1 GPU uses same concept.

sadly it's almost nothing known about Hikaru development. all is what known for sure - this platform was released 1999/7/12 (July 1999) as said in Brave Firefighters game ROM header. everything else is speculation.gm_matthew wrote: ↑Thu May 15, 2025 1:01 am The graphics chip part numbers suggest that most of them may have been designed shortly after Model 3 Step 2.x; the graphics chips on step 2.x have the part numbers 315-6057 to 315-6061 while most of the graphics chips on Hikaru have part numbers 315-6083 to 315-6087; the exceptions are the two Atlantis chips (315-6197) which still predate the PowerVR chip (315-6267) used on Dreamcast/NAOMI.

It looks to me like Hikaru was conceived around 1997 or so to be the successor to Model 3, but then the much cheaper NAOMI came along so Sega decided to only use Hikaru for games which really needed the extra power.

as of Dreamcast/NAOMI: in short, it was most active developed during 1998, design of HOLLY chipset was finalized late summer 1998, from early autumn 1998 Videologic/ImgTec started work on ELAN T&L for Naomi 2.

in about same time, end summer - early autumn, Hitachi has been finishing work on SH4 CPU, Katana Set 4 proto/devbox documentation from August 1998 have list of SH4 CPU bugs in this device. they was fixed until November 1998 Japanese Dreamcast release.

obviously, they was unable to create Hikaru any earlier, until all the work on SH4 CPU was done and all it's bugs was fixed.

but, as you noticed, almost all the GPU ASICs have quite small part numbers, so yes, it's possible they was created during 1997-98 as standalone PCI GPU or for some other project, but later Sega decided to build SH4-based hardware with it.

Re: Model 3 Step 1/1.5 and Step 2 Video Board Differences

here is short summary of Hikaru GPU command set, so you may see which objects and attributes it operate.

it's also may worth to check Stefano's description https://github.com/stefanoteso/valkyrie ... -cp.c#L146

Code: Select all

Command set summary:

xxxxxxxx xxxxxxxx xxxxMMMT TTLLOOOO

O - operation class

0 - NOP

1 - SetReg

2 - Branch

3 - Select

4 - Create

5 - Test

6 - Etc

7 - Reserved (invalid)

8-F - Render Primitive

L - command packet length, 4<<LL bytes

T - object or operation type

set register:

0 - view port

0 - fov x/y/depth

1 - center, cliprect

2 - znear, zfar, 3bit priority?

3 - "depth queue" (fog) mode, RGB (only used in modes 0/1), x2 coefficients

4 - ambient RGB

1 - light

0 - ambient RGB and "strenght" coefficient

1 - attenuation type (no, linear, reciprocal 1&2, square) and coefficients

2 - type (inf, point, spot, constant) and spot coefficients

2 - material

0 - polygon0 RGB

1 - polygon1 RGB

2 - specular RGB and "shininess" coefficient

3 - material RGB

4 - flags: 2bit mode (shading/lighting enable), zblend(fog), use texture, alpha mode (seems flip 0/1 color), highlight mode

5 - blend mode (2bit)

6 - alpha threshold idx

3 - texhead

0 - unknown, 4 mode/idx bits (usually 1 or 0) and 8bit value, possible mip lod bias etc control

1 - flags: uv 12.4/16.0, unknown, 3bit width, 3bit height, wrap u, wrap v, mirror u, mirror v, 3bit format, 3bit mode (decal,replace etc, top bit function is not clear)

2 - texture location: bank, slot x, slot y (in 16x16pix units)

3 - uv "scroll", 0-3 idx and 2 16bit values

4 - polygon

0 - seems depth bias (not really understood)

1 - ? used but arguments always 0, possible lights related

2 - unknown, 5 bit value, usually used on shadows. depth stencil or "layered polygons" related?

3 - ? unused

4 - fixed point vertices coords scale exponent

5 - ? unused

5 - matrix / vector

0 - 4x3 matrices upload, and add/mul operations with them

2 - vector for test commands

4,5 - light position/direction

1,3,6 - unused / unknown

6 - frame buffers

0 - back to front blend enable and coefficent

1 - buffers 0 and 1 address

2 - buffers 2 and 3 address

3 - front&back buffers idx and pixel format

4 - front buffer clear RGBA

select:

0 - view port

1 - light group

2 - material

3 - texhead

4 - polygon type and alpha

create:

0 - view port

1 - light group

2 - material

3 - texhead

4 - light

5 - alpha threshold

6 - penumbra map (for spot lights)

branch: (absolute or relative, may be conditional, conditions mostly not understood)

0 - jump

1 - call

2 - ret

7 - stop/kill

test:

0 - distance test

1,2 - not clear

etc:

0 - push matrix

1 - pop matrix

M - mode bits or register for SetReg

x - optional command specific or unusedRe: Model 3 Step 1/1.5 and Step 2 Video Board Differences

This is pretty fascinating. I've not heard of Real3D developing anything after the Pro-1000. I believe work did start on a successor architecture, though, but no one has ever mentioned it being connected to Sega or seeing the light of day in any form. Who else might have developed this chip? Could it just have been some custom chip by a Japanese vendor?